Jamba is a novel architecture built on a hybrid Transformer and Mamba SSM design, developed by AI21 Labs. With 52 billion parameters, it is the largest Mamba variant created so far. It has a context window of 256k tokens.[12]

Operating on byte-sized tokens, Transformers scale poorly because every token must "attend to" every other token, which leads to O(n²) scaling in sequence length. As a result, Transformers use subword tokenization to reduce the number of tokens in a text; however, this leads to very large vocabulary tables and word embeddings.
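To make the quadratic cost concrete, the sketch below counts token pairs for a byte-level sequence and for a much shorter one. The whitespace split is only a crude stand-in for a subword tokenizer (an assumption for illustration, not how a real tokenizer works), and `attention_pairs` is a hypothetical helper name.

```python
def attention_pairs(n_tokens: int) -> int:
    """Number of (query, key) pairs full self-attention scores: n * n."""
    return n_tokens * n_tokens

text = "State space models scale linearly with sequence length."

# Byte-level view: one token per UTF-8 byte.
n_bytes = len(text.encode("utf-8"))

# Crude stand-in for subword tokenization (assumption): one token per word.
n_words = len(text.split())

print("byte tokens:", n_bytes, "pairs:", attention_pairs(n_bytes))
print("word tokens:", n_words, "pairs:", attention_pairs(n_words))
```

Doubling the sequence length quadruples the number of pairs, which is why shortening the sequence through tokenization matters so much for attention-based models.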

This tensor is not affected by padding. It is used to update the cache in the correct position and to infer

This model inherits from PreTrainedModel. Check the superclass documentation for the generic methods the library implements for all its models (such as downloading or saving, resizing the input embeddings, pruning heads).

Two implementations cohabit: one is optimized and uses fast CUDA kernels, while the other is naive but can run on any device!
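A minimal sketch of how code might choose between the two paths is shown below. It assumes the fast path depends on the optional `mamba_ssm` and `causal_conv1d` packages being installed; the helper names are hypothetical, and a real check would also need to confirm that a CUDA device is present.

```python
import importlib.util

# Assumption: the fast path needs these optional packages.
OPTIONAL_KERNEL_PACKAGES = ("mamba_ssm", "causal_conv1d")

def fast_kernels_available() -> bool:
    """True only if every optional kernel package can be located."""
    return all(
        importlib.util.find_spec(name) is not None
        for name in OPTIONAL_KERNEL_PACKAGES
    )

def pick_implementation() -> str:
    """Return a label for the path this environment would use."""
    return "fast" if fast_kernels_available() else "naive"

print("selected implementation:", pick_implementation())
```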

Whether or not to return the hidden states of all layers. See hidden_states under returned tensors for

We are excited about the broad applications of selective state space models to build foundation models for different domains, especially in emerging modalities requiring long context such as genomics, audio, and video.


efficiently as either a recurrence or convolution, with linear or near-linear scaling in sequence length
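The recurrence/convolution duality can be illustrated with a toy time-invariant SSM that has a single scalar state. This is a sketch under simplifying assumptions: the parameters `a`, `b`, `c` and the function names are chosen here for illustration, and the convolution is computed directly rather than with an FFT.

```python
def ssm_recurrent(a, b, c, u):
    """Step-by-step form: h_t = a*h_{t-1} + b*u_t, y_t = c*h_t."""
    h, ys = 0.0, []
    for u_t in u:
        h = a * h + b * u_t
        ys.append(c * h)
    return ys

def ssm_convolutional(a, b, c, u):
    """Same map as a causal convolution with kernel K_k = c * a**k * b."""
    length = len(u)
    kernel = [c * (a ** k) * b for k in range(length)]
    return [
        sum(kernel[k] * u[t - k] for k in range(t + 1))
        for t in range(length)
    ]

u = [1.0, 0.5, -2.0, 3.0]
y_rec = ssm_recurrent(0.9, 0.5, 2.0, u)
y_conv = ssm_convolutional(0.9, 0.5, 2.0, u)
print(y_rec)
print(y_conv)
```

The recurrent form takes time linear in the sequence length. The direct convolution above is quadratic; the near-linear cost comes from evaluating the same convolution with an FFT, which is omitted here to keep the sketch short.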


We introduce a selection mechanism to structured state space models, allowing them to perform context-dependent reasoning while scaling linearly in sequence length.

Mamba is a new state space model architecture that rivals the classic Transformers. It is based on the line of progress on structured state space models, with an efficient hardware-aware design and implementation in the spirit of FlashAttention.


Mamba introduces significant enhancements to S4, particularly in its treatment of time-variant operations. It adopts a unique selection mechanism that adapts structured state space model (SSM) parameters based on the input.
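The idea of input-dependent parameters can be sketched with a naive sequential scan over a single input channel. This is an illustrative toy, not the optimized kernel: the weights `w_B`, `w_C`, `w_delta`, `b_delta` and the simplified discretization (exponential for A, step size times B for the input term) are assumptions made for this example.

```python
import math

def softplus(z):
    """Smooth positive function, used here to keep the step size > 0."""
    return math.log1p(math.exp(z))

def selective_scan(xs, A, w_B, w_C, w_delta, b_delta):
    """Naive scan where the step size, B, and C are recomputed per input."""
    h = [0.0] * len(A)  # one hidden value per state dimension
    ys = []
    for x in xs:
        delta = softplus(w_delta * x + b_delta)  # input-dependent step size
        B = [w * x for w in w_B]                 # input-dependent B_t
        C = [w * x for w in w_C]                 # input-dependent C_t
        # Discretize and update: h = exp(delta*A) * h + delta * B_t * x
        h = [
            math.exp(delta * a_n) * h_n + delta * b_n * x
            for a_n, h_n, b_n in zip(A, h, B)
        ]
        ys.append(sum(c_n * h_n for c_n, h_n in zip(C, h)))
    return ys

params = dict(A=[-1.0, -2.0], w_B=[1.0, 0.5], w_C=[0.5, 1.0],
              w_delta=1.0, b_delta=0.0)
y_small = selective_scan([1.0, 0.0, 0.5], **params)
y_large = selective_scan([2.0, 0.0, 1.0], **params)
print(y_small)
print(y_large)
```

Because the parameters change with each input, the map is no longer a fixed convolution: doubling the input does not simply double the output, which is what separates this from a time-invariant model such as S4.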

Richard "Little Hercules" Sandrak Then & Now!

Richard "Little Hercules" Sandrak Then & Now! Andrew Keegan Then & Now!

Andrew Keegan Then & Now! Tina Louise Then & Now!

Tina Louise Then & Now! Catherine Bach Then & Now!

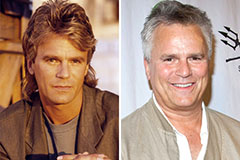

Catherine Bach Then & Now! Richard Dean Anderson Then & Now!

Richard Dean Anderson Then & Now!